[PDF] Steepest Descent and Conjugate Gradient Methods with Variable Preconditioning

By a mysterious writer

Last updated 19 April 2025

![Steepest Descent and Conjugate Gradient Methods with Variable Preconditioning](https://d3i71xaburhd42.cloudfront.net/a0174a41c7d682aeb1d7e7fa1fbd2404e037a638/11-Figure8.1-1.png)

We analyze the conjugate gradient (CG) method with variable preconditioning for solving a linear system with a real symmetric positive definite (SPD) coefficient matrix $A$. We assume that the preconditioner is SPD on each step, and that the condition number of the preconditioned system matrix is bounded above by a constant independent of the step number. We show that, under this assumption, the CG method with variable preconditioning may give no improvement over the steepest descent (SD) method. We describe the basic theory of CG methods with variable preconditioning, with emphasis on worst-case scenarios, and provide complete proofs of all facts not available in the literature. We also give a new, elegant geometric proof of the SD convergence rate bound.

Our numerical experiments, comparing the preconditioned SD and CG methods, not only support and illustrate our theoretical findings, but also reveal two surprising and potentially practically important effects.

First, we analyze variable preconditioning in the form of inner-outer iterations. In previous tests of this kind, the unpreconditioned CG inner iterations were applied to an artificial system whose coefficient matrix is some fixed preconditioner. We test a different scenario, in which the unpreconditioned CG inner iterations solve linear systems with the original system matrix $A$. We demonstrate that the CG-SD inner-outer iterations perform as well as the CG-CG inner-outer iterations in these tests.

Second, we compare the CG methods using a two-grid preconditioning with fixed and randomly chosen coarse grids, and observe that the fixed preconditioner method is twice as slow as the method with random preconditioning.
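The inner-outer setup described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' code: the function names `inner_cg` and `outer_solve`, the test matrix, the number of inner steps, and the tolerances are all assumptions made for illustration. The preconditioner is applied by running a few unpreconditioned CG steps on a system with $A$ itself, so it changes with every outer step. For the outer CG update, the sketch uses a Polak-Ribière-style formula for `beta`, a common choice when the preconditioner varies because it keeps consecutive search directions $A$-conjugate; whether this matches the exact formula in the paper is also an assumption.

```python
import numpy as np


def inner_cg(A, r, steps):
    """Approximate z = A^{-1} r with a fixed number of plain CG steps from z = 0.

    Used as a variable preconditioner: the map r -> z depends on r itself.
    """
    z = np.zeros_like(r)
    res = r.copy()
    p = res.copy()
    rs = res @ res
    for _ in range(steps):
        if rs == 0.0:
            break
        Ap = A @ p
        alpha = rs / (p @ Ap)
        z += alpha * p
        res -= alpha * Ap
        rs_new = res @ res
        p = res + (rs_new / rs) * p
        rs = rs_new
    return z


def outer_solve(A, b, method, inner_steps=4, tol=1e-8, max_iter=1000):
    """Outer preconditioned SD ("sd") or CG ("cg") with inner CG preconditioning.

    Returns the approximate solution and the number of outer iterations used.
    """
    x = np.zeros_like(b)
    r = b.copy()
    b_norm = np.linalg.norm(b)
    p = None
    for k in range(max_iter):
        if np.linalg.norm(r) <= tol * b_norm:
            return x, k
        z = inner_cg(A, r, inner_steps)  # apply the variable preconditioner
        if method == "sd" or p is None:
            p = z  # steepest descent: search along the preconditioned residual
        else:
            # Polak-Ribiere-style beta (assumed choice, see lead-in).
            beta = (z @ (r - r_prev)) / rz_prev
            p = z + beta * p
        Ap = A @ p
        rz = r @ z
        alpha = rz / (p @ Ap)
        r_prev, rz_prev = r.copy(), rz
        x = x + alpha * p
        r = r - alpha * Ap
    return x, max_iter


if __name__ == "__main__":
    # Hypothetical test problem: 1D Laplacian (SPD) with a random right-hand side.
    n = 20
    A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    b = np.random.default_rng(0).standard_normal(n)
    for method in ("sd", "cg"):
        x, iters = outer_solve(A, b, method)
        print(method, iters, np.linalg.norm(A @ x - b))
```

Running the script prints the outer iteration count and final residual norm for each outer method, which gives a rough way to compare SD and CG outer iterations on a small problem. The result on this toy matrix should not be read as reproducing the paper's experiments.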


Recomendado para você

-

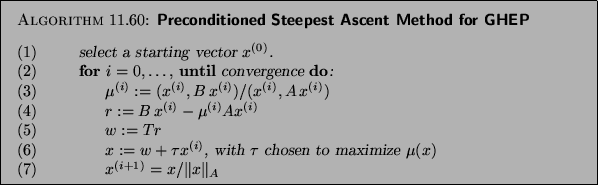

Preconditioned Steepest Ascent/Descent Methods19 abril 2025

Preconditioned Steepest Ascent/Descent Methods19 abril 2025 -

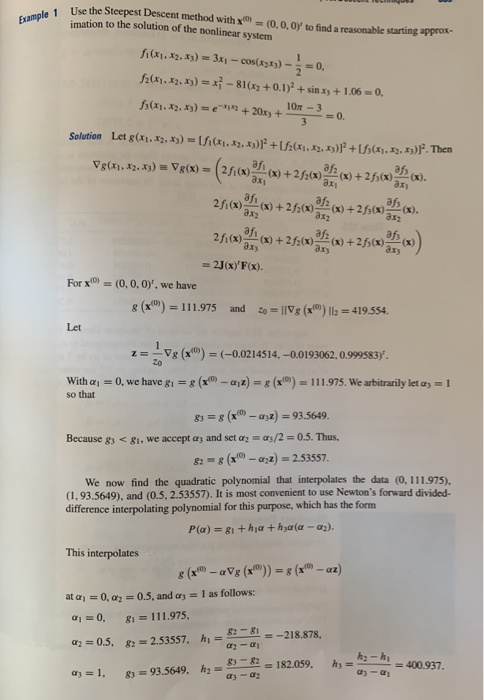

Solved (b) Consider the nonlinear system of equations z +19 abril 2025

Solved (b) Consider the nonlinear system of equations z +19 abril 2025 -

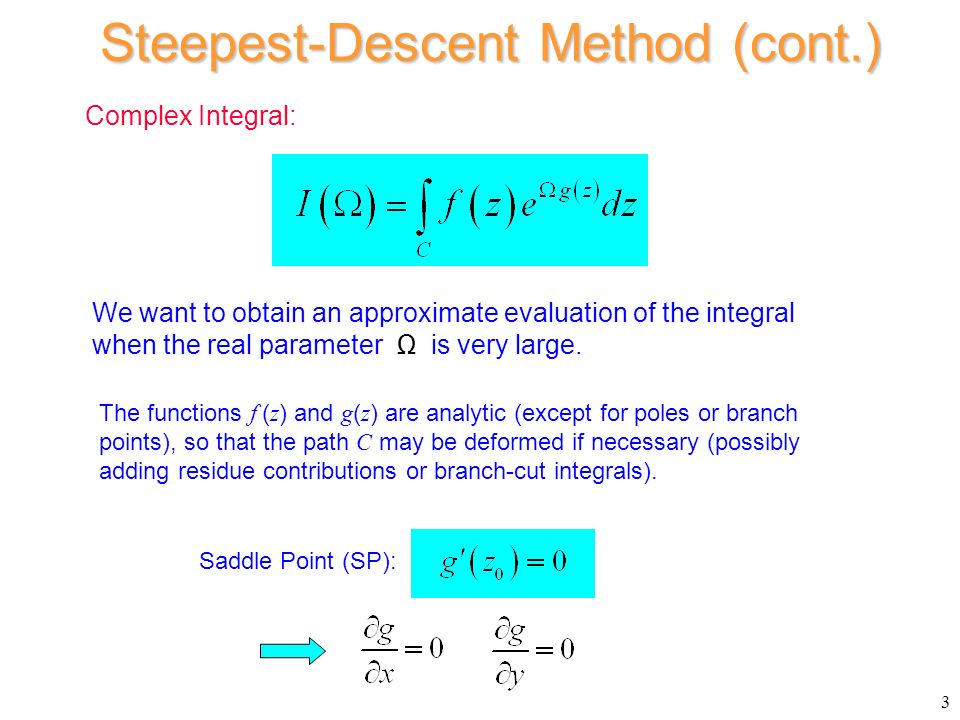

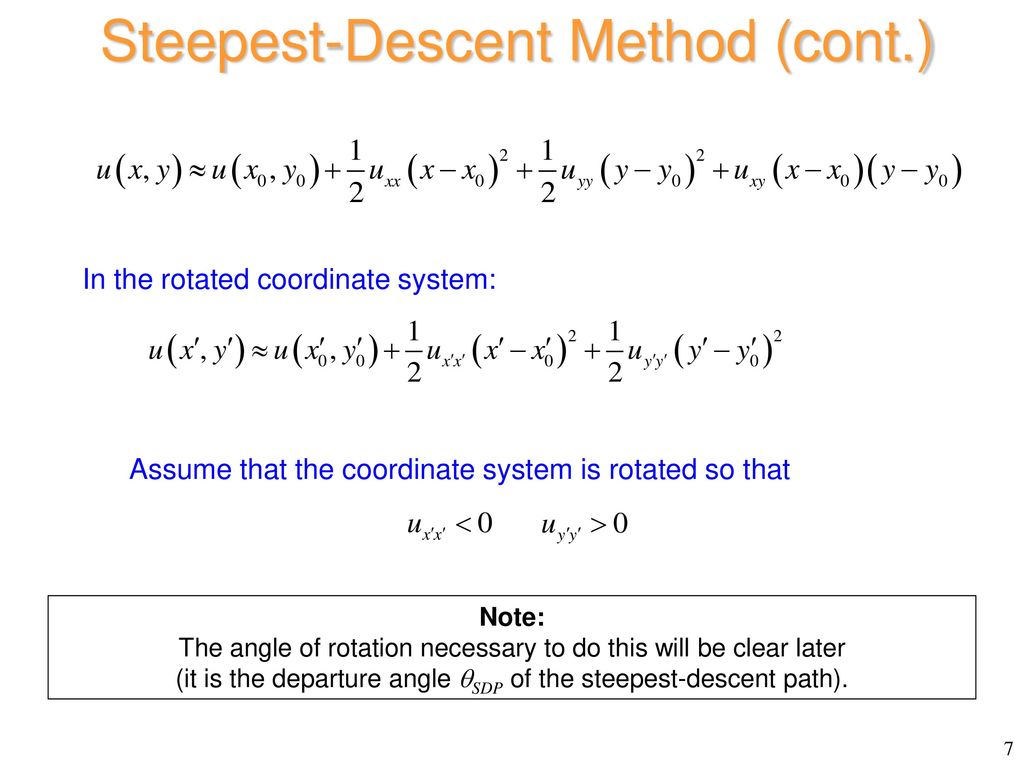

The Steepest-Descent Method - ppt video online download19 abril 2025

The Steepest-Descent Method - ppt video online download19 abril 2025 -

Conjugate gradient methods - Cornell University Computational Optimization Open Textbook - Optimization Wiki19 abril 2025

Conjugate gradient methods - Cornell University Computational Optimization Open Textbook - Optimization Wiki19 abril 2025 -

The Steepest-Descent Method - ppt download19 abril 2025

The Steepest-Descent Method - ppt download19 abril 2025 -

python - Steepest Descent Trace Behavior - Stack Overflow19 abril 2025

python - Steepest Descent Trace Behavior - Stack Overflow19 abril 2025 -

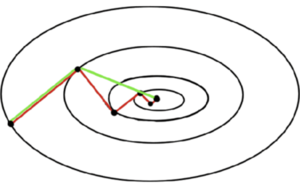

Curves of Steepest Descent for 3D Functions - Wolfram Demonstrations Project19 abril 2025

Curves of Steepest Descent for 3D Functions - Wolfram Demonstrations Project19 abril 2025 -

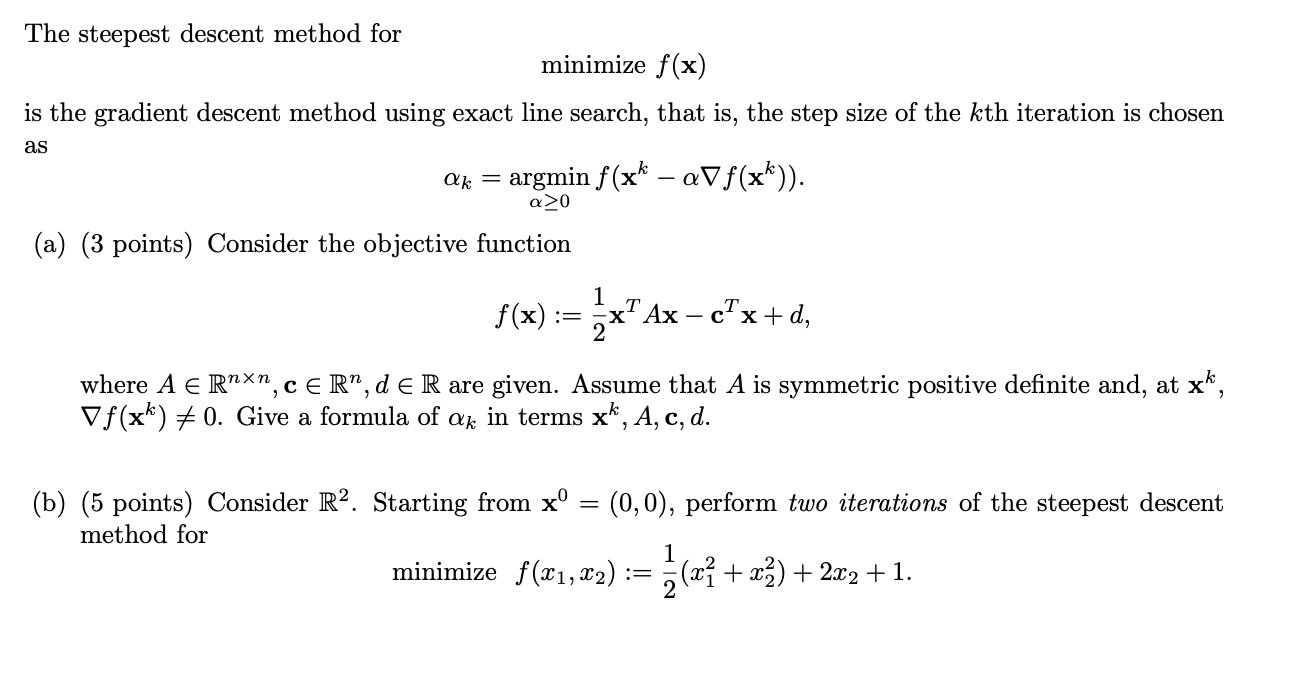

Solved The steepest descent method for minimize f(x) is the19 abril 2025

Solved The steepest descent method for minimize f(x) is the19 abril 2025 -

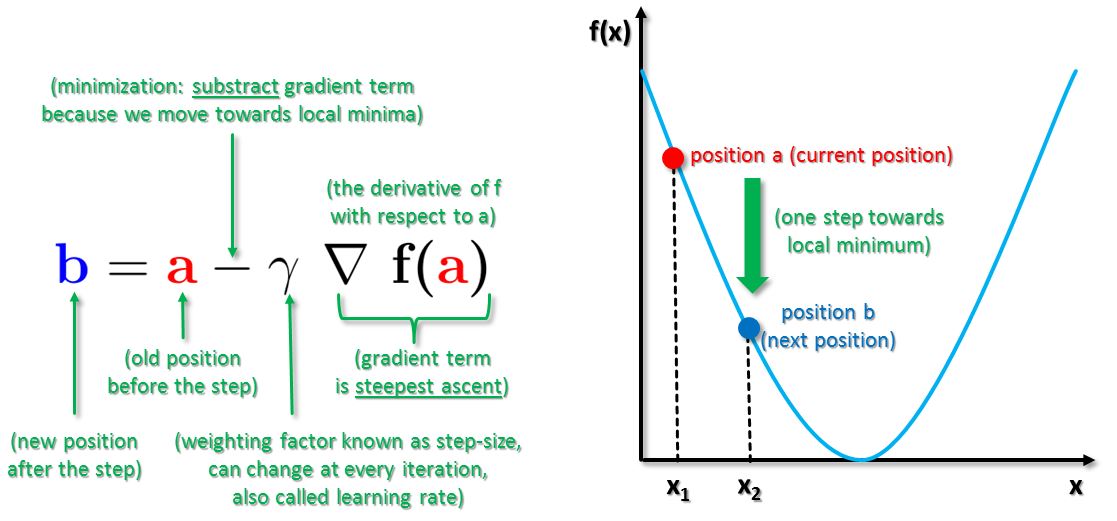

Gradient Descent Big Data Mining & Machine Learning19 abril 2025

Gradient Descent Big Data Mining & Machine Learning19 abril 2025 -

Solving unconstrained optimization problems using steepest descent algorithm : r/optimization19 abril 2025

Solving unconstrained optimization problems using steepest descent algorithm : r/optimization19 abril 2025

você pode gostar

-

Regras Do Xadrez, PDF, Xadrez19 abril 2025

-

Hutao Guide + INSANE F2P HUTAO TEAM COMP!!!19 abril 2025

Hutao Guide + INSANE F2P HUTAO TEAM COMP!!!19 abril 2025 -

Pokémon Mystery Power Cube Trading Card Game19 abril 2025

Pokémon Mystery Power Cube Trading Card Game19 abril 2025 -

JailbreaK - Roblox19 abril 2025

-

Rags To Riches S3E8 Going To Comic-Con19 abril 2025

Rags To Riches S3E8 Going To Comic-Con19 abril 2025 -

60 / Multiplier, The Rooms Ideas Wiki19 abril 2025

60 / Multiplier, The Rooms Ideas Wiki19 abril 2025 -

Tower Defense simulator new Prime Gaming Raven skin19 abril 2025

Tower Defense simulator new Prime Gaming Raven skin19 abril 2025 -

Resultados dos jogos de ontem da Uefa Champions League19 abril 2025

Resultados dos jogos de ontem da Uefa Champions League19 abril 2025 -

Governo autoriza realização de Concurso para o IGP-RS19 abril 2025

Governo autoriza realização de Concurso para o IGP-RS19 abril 2025 -

Quebra-cabeça do Papai Noel - Atividades Suzaninho Atividades natalinas educação infantil, Atividades natal educação infantil, Atividades de natal19 abril 2025

Quebra-cabeça do Papai Noel - Atividades Suzaninho Atividades natalinas educação infantil, Atividades natal educação infantil, Atividades de natal19 abril 2025