GSM8K Dataset Papers With Code

By a mysterious writer

Last updated 28 March 2025

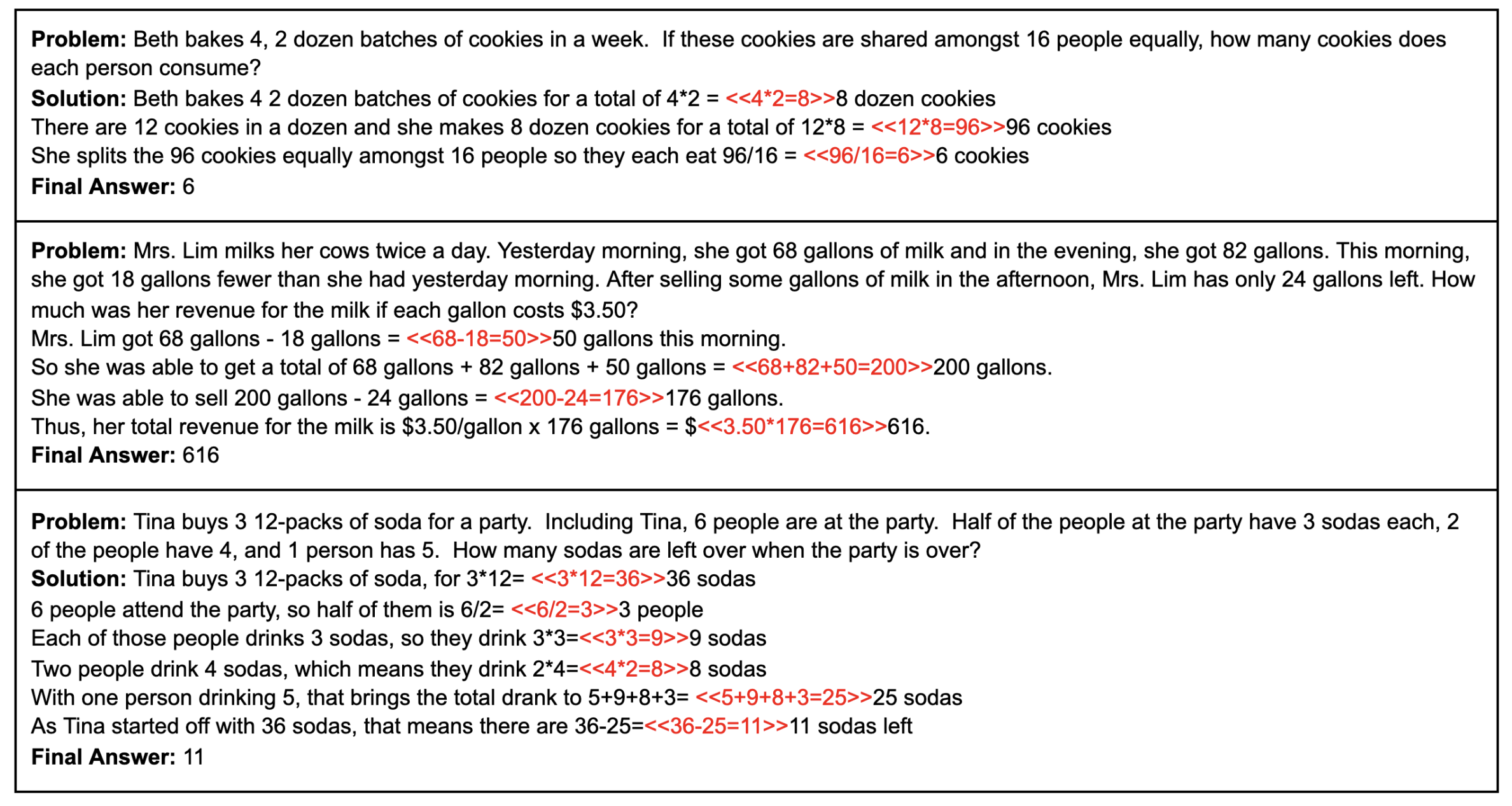

GSM8K is a dataset of 8.5K high-quality, linguistically diverse grade school math word problems created by human problem writers. The dataset is split into 7.5K training problems and 1K test problems. Each problem takes between 2 and 8 steps to solve, and solutions primarily involve a sequence of elementary calculations using the basic arithmetic operations (+, −, ×, ÷) to reach the final answer. A bright middle school student should be able to solve every problem. The dataset can be used to train and evaluate multi-step mathematical reasoning.
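As a rough illustration of how a multi-step solution might be scored, the sketch below pulls the final answer out of a solution string and compares it with a prediction. It assumes the convention used in the publicly released GSM8K files, where each reference solution ends with a line of the form `#### <answer>`. The helper names `extract_final_answer` and `is_correct` and the sample problem are hypothetical and are not taken from the dataset.

```python
import re

# Assumption: the reference solution ends with a line like "#### 28".
ANSWER_RE = re.compile(r"####\s*(-?[\d,]*\.?\d+)")


def extract_final_answer(solution: str):
    """Return the final numeric answer as a string, or None if no marker is found."""
    match = ANSWER_RE.search(solution)
    if match is None:
        return None
    # Drop thousands separators so "1,000" and "1000" compare equal.
    return match.group(1).replace(",", "")


def is_correct(predicted: str, reference_solution: str) -> bool:
    """Compare a predicted answer with the reference answer numerically."""
    gold = extract_final_answer(reference_solution)
    if gold is None:
        return False
    try:
        return float(predicted.replace(",", "")) == float(gold)
    except ValueError:
        # The prediction was not a parseable number.
        return False


# Made-up two-step problem in the style described above (not from the dataset).
example_solution = (
    "Each box holds 12 pencils, so 3 boxes hold 3 * 12 = 36 pencils.\n"
    "After giving away 8, 36 - 8 = 28 pencils remain.\n"
    "#### 28"
)

print(extract_final_answer(example_solution))
print(is_correct("28", example_solution))
```

Comparing as numbers rather than strings is a design choice here, so that formatting differences such as `28` versus `28.0` do not count as errors.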

[PDF] Large Language Models are Better Reasoners with Self-Verification

How Surge AI Built OpenAI's GSM8K Dataset of 8,500 Math Problems

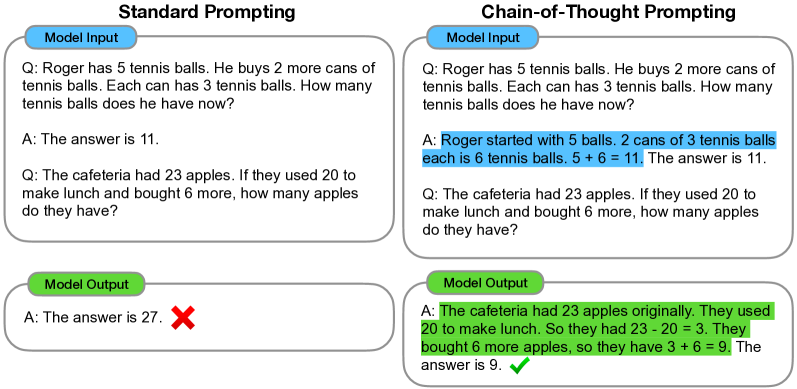

[2201.11903] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

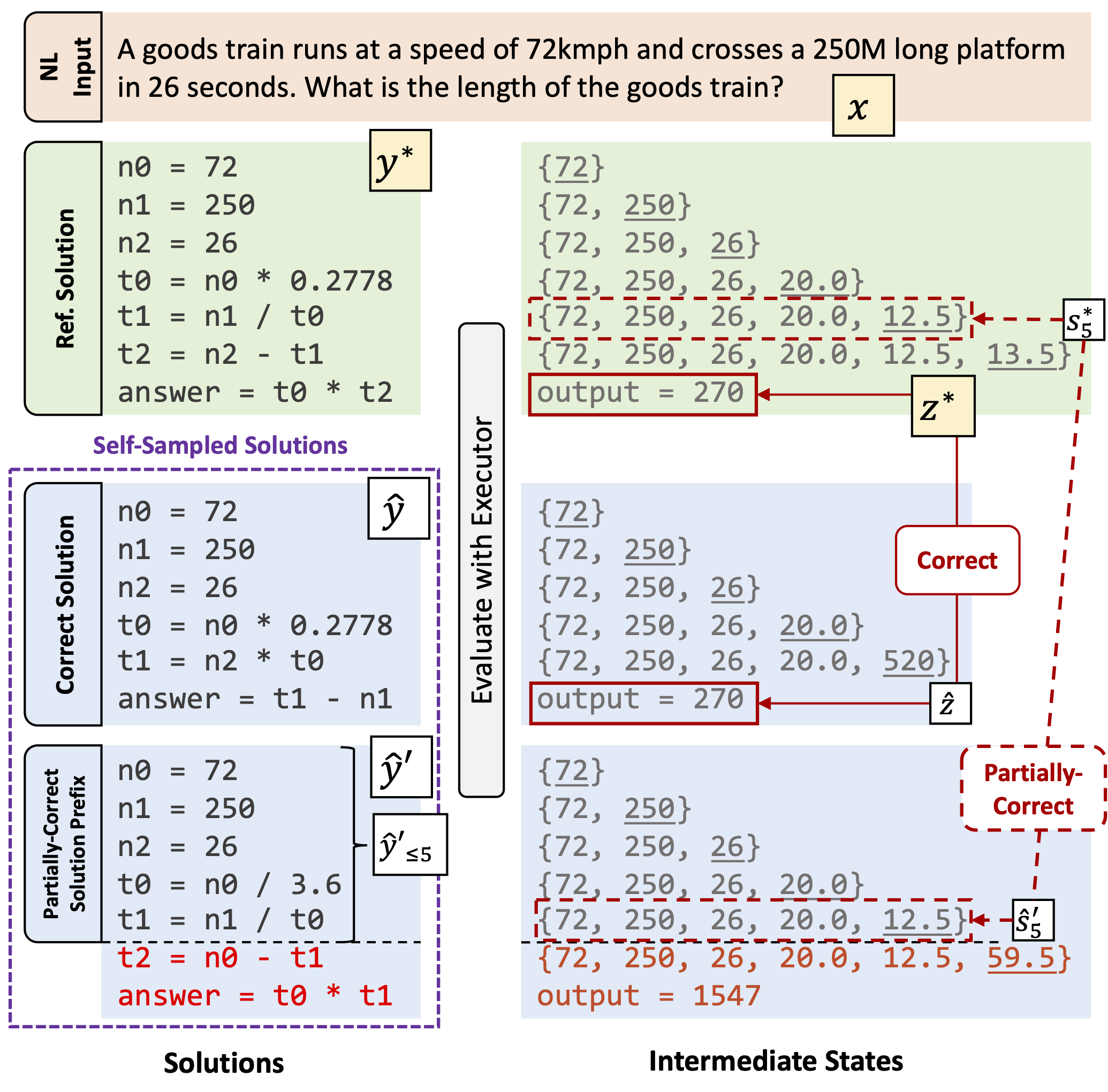

Learning Math Reasoning from Self-Sampled Correct and Partially-Correct Solutions

Darren Angle on LinkedIn: 3 quick prompt engineering tips for better outputs from large language…

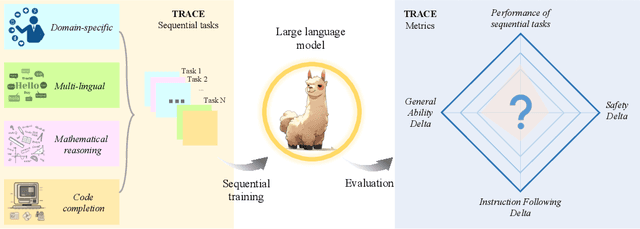

How Abilities in Large Language Models are Affected by Supervised Fine-tuning Data Composition – arXiv Vanity

Papers with Code

TinyGSM: achieving >80% on GSM8k with small language models

Yicheng Zou - CatalyzeX

ToRA: a tool-integrated reasoning agent for mathematical problem solving, surpassing prior open source models on 10 mathematical reasoning datasets : r/LocalLLaMA

Phi-1.5: 41.4% HumanEval in 1.3B parameters (model download link in comments) : r/LocalLLaMA

Recomendado para você

-

Tay Training - A pergunta que eu mais recebo.. O que é28 março 2025

-

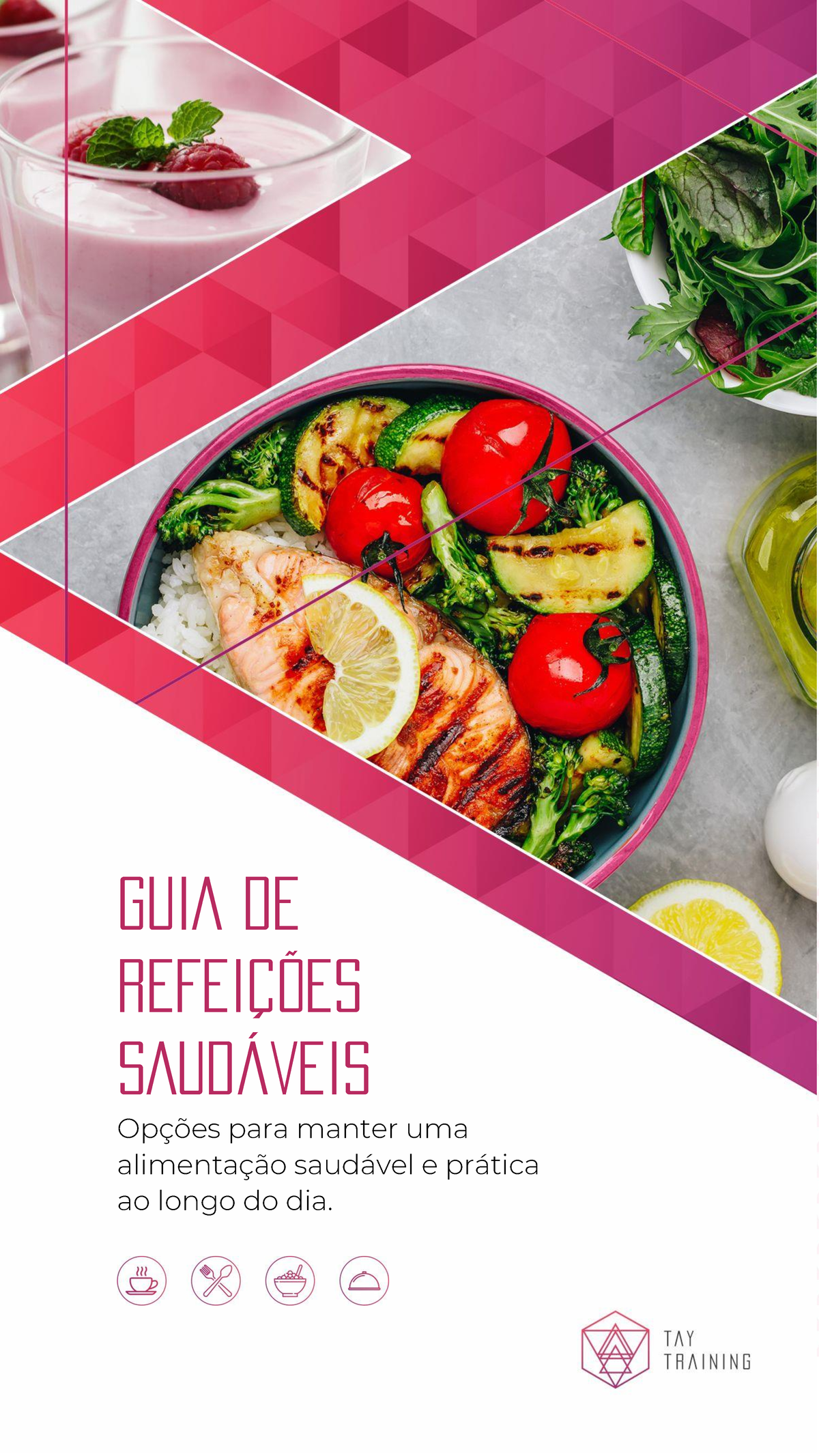

Guia Alimentar - Tay Training Desafio - GUIA DE REFEIÇÕES SAUDÁVEIS Opções para manter uma - Studocu28 março 2025

Guia Alimentar - Tay Training Desafio - GUIA DE REFEIÇÕES SAUDÁVEIS Opções para manter uma - Studocu28 março 2025 -

Guia Alimentar - Tay Training - Nutrição28 março 2025

Guia Alimentar - Tay Training - Nutrição28 março 2025 -

Trial of Training to Reduce Driver Inattention in Teens with ADHD28 março 2025

Trial of Training to Reduce Driver Inattention in Teens with ADHD28 março 2025 -

We Are Hiring Job Instagram Post28 março 2025

We Are Hiring Job Instagram Post28 março 2025 -

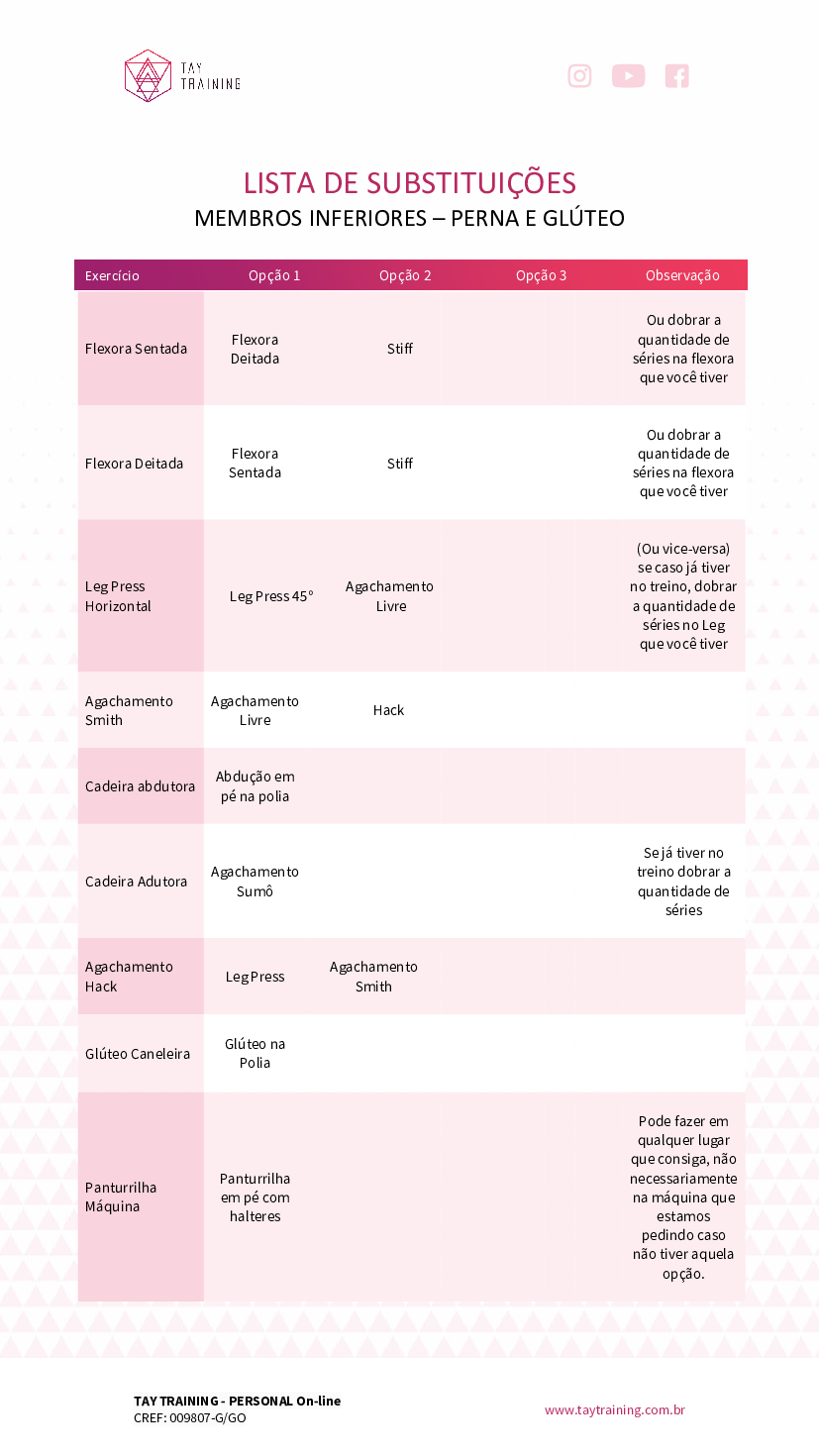

LISTA DE SUBSTITUIÇÕES - MEMBROS INFERIORES E SUPERIORES - Baixar28 março 2025

LISTA DE SUBSTITUIÇÕES - MEMBROS INFERIORES E SUPERIORES - Baixar28 março 2025 -

Book Section: Essay and Review: Delinquent Violent Youth28 março 2025

-

NAMI-OC Programs for Teens & Young Adults — NAMI Orange County28 março 2025

NAMI-OC Programs for Teens & Young Adults — NAMI Orange County28 março 2025 -

PFF 2021 NFL Draft Guide: PFF's top CB prospect, plus a wild-card28 março 2025

PFF 2021 NFL Draft Guide: PFF's top CB prospect, plus a wild-card28 março 2025 -

Tay Zar Win Khant - Mechanical Supervisor - Wilmar Myanmar28 março 2025

você pode gostar

-

Macacos em Marrocos28 março 2025

Macacos em Marrocos28 março 2025 -

Week 10 Connected Play: Online Games & Gaming, by GopherGirl199928 março 2025

Week 10 Connected Play: Online Games & Gaming, by GopherGirl199928 março 2025 -

CACTU-SAMA - Play Online for Free!28 março 2025

CACTU-SAMA - Play Online for Free!28 março 2025 -

Inosuke e Zenitsu para colorir28 março 2025

Inosuke e Zenitsu para colorir28 março 2025 -

Ecchi 1080P, 2K, 4K, 5K HD wallpapers free download28 março 2025

Ecchi 1080P, 2K, 4K, 5K HD wallpapers free download28 março 2025 -

Best Anime Like Hell's Paradise: Jigokuraku28 março 2025

Best Anime Like Hell's Paradise: Jigokuraku28 março 2025 -

Sonic Frontiers Mobile (Android) & iOS (iPhone, iPad) Release Date28 março 2025

Sonic Frontiers Mobile (Android) & iOS (iPhone, iPad) Release Date28 março 2025 -

Super Queijos - Super Pizza Pan28 março 2025

Super Queijos - Super Pizza Pan28 março 2025 -

Download Cheat Ucus98737 - Colaboratory28 março 2025

Download Cheat Ucus98737 - Colaboratory28 março 2025 -

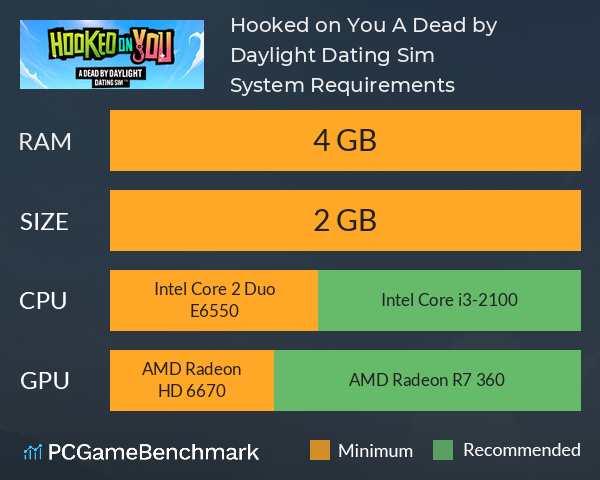

Hooked on You: A Dead by Daylight Dating Sim System Requirements - Can I Run It? - PCGameBenchmark28 março 2025

Hooked on You: A Dead by Daylight Dating Sim System Requirements - Can I Run It? - PCGameBenchmark28 março 2025