Chatbot Arena: Benchmarking LLMs in the Wild with Elo Ratings

By a mysterious writer

Last updated 30 March 2025

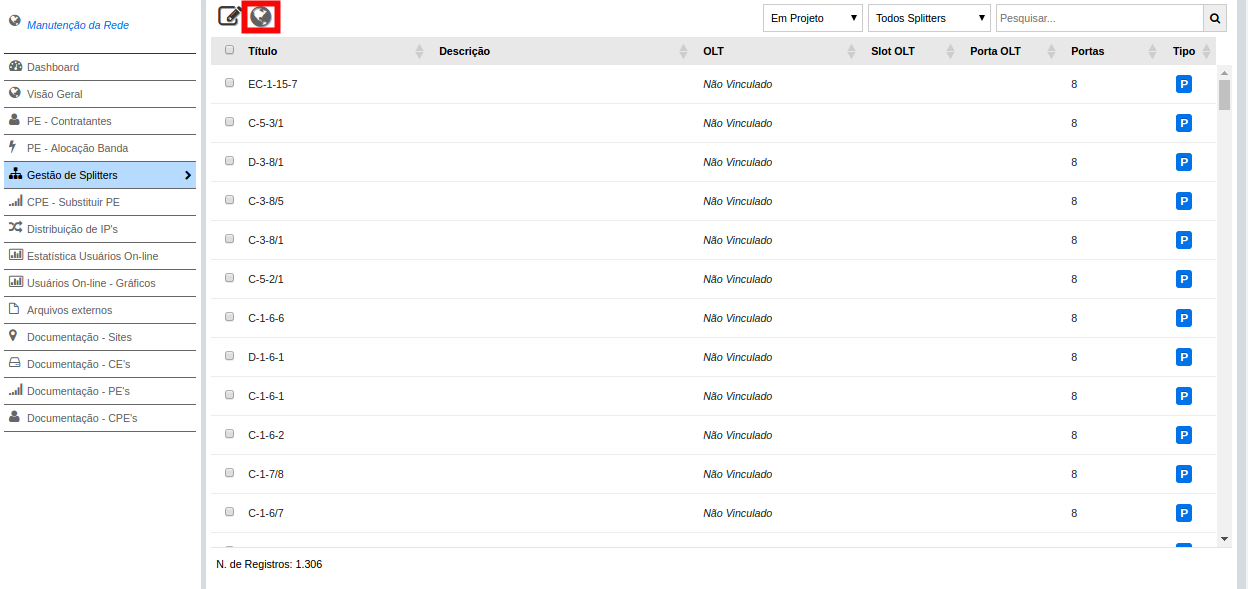

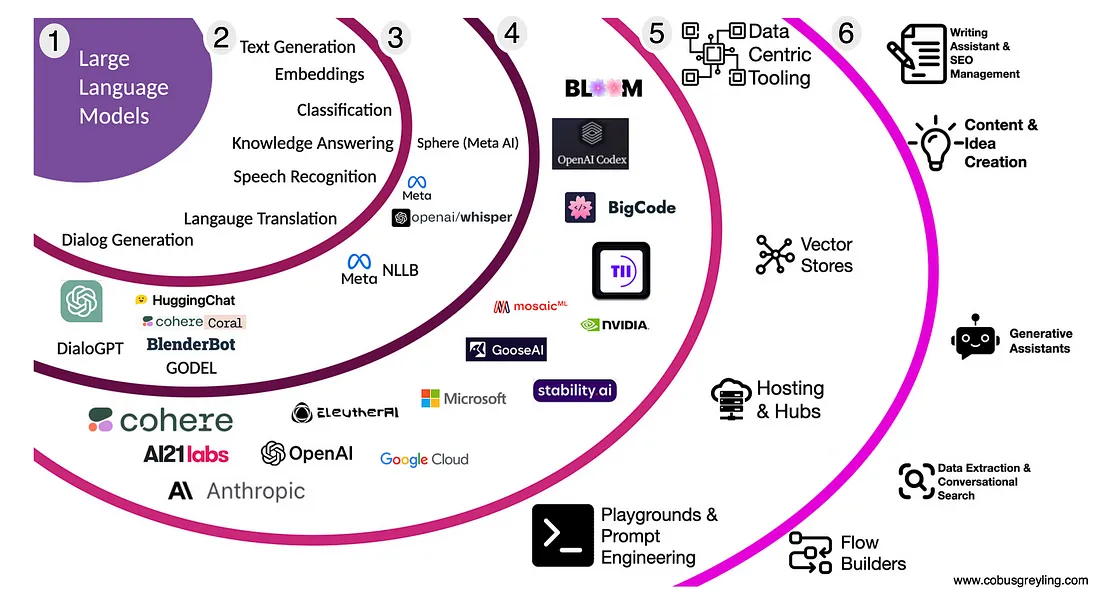

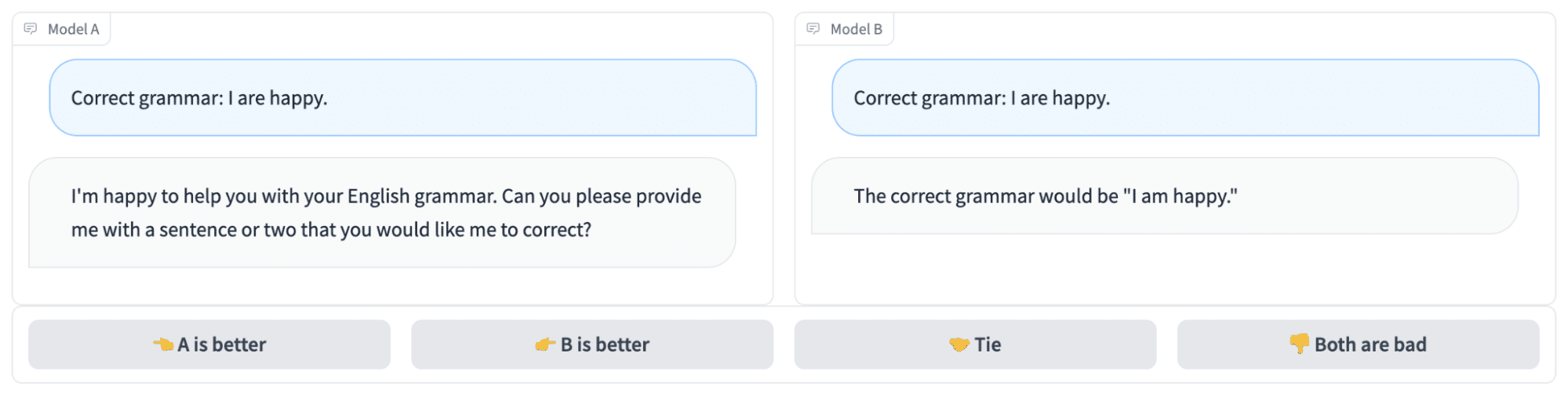

We present Chatbot Arena, a benchmark platform for large language models (LLMs) that features anonymous, randomized battles in a crowdsourced manner. In t…
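The title refers to Elo ratings derived from these pairwise battles. The sketch below shows a standard online Elo update over a battle log. It is an illustration only: the parameters (K = 32, initial rating 1000, base 10, scale 400), the function names `expected_score` and `compute_elo`, and the `(model_a, model_b, winner)` tuple format are hypothetical assumptions, not Chatbot Arena's actual implementation.

```python
from collections import defaultdict

# Hypothetical parameters chosen for illustration; not taken from the source.
K = 32            # step size (K-factor) for each rating update
SCALE = 400       # rating gap that corresponds to 10:1 odds
BASE = 10
INIT_RATING = 1000


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + BASE ** ((r_b - r_a) / SCALE))


def compute_elo(battles):
    """Apply online Elo updates to a hypothetical battle log.

    battles: iterable of (model_a, model_b, winner) tuples, where winner
    is "model_a", "model_b", or "tie". Returns a dict of final ratings.
    """
    ratings = defaultdict(lambda: INIT_RATING)
    for model_a, model_b, winner in battles:
        e_a = expected_score(ratings[model_a], ratings[model_b])
        # Actual score for A: win = 1, loss = 0, tie = 0.5.
        if winner == "model_a":
            s_a = 1.0
        elif winner == "model_b":
            s_a = 0.0
        elif winner == "tie":
            s_a = 0.5
        else:
            raise ValueError(f"unknown winner: {winner!r}")
        # B's expected and actual scores are 1 - e_a and 1 - s_a,
        # so B's update is exactly the negative of A's.
        delta = K * (s_a - e_a)
        ratings[model_a] += delta
        ratings[model_b] -= delta
    return dict(ratings)
```

For example, `compute_elo([("model-x", "model-y", "model_a")])` moves two models that both start at 1000 to 1016 and 984. Because updates are applied one battle at a time, the final ratings depend on the order in which battles are processed.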

Knowledge Zone AI and LLM Benchmarks

(PDF) PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization

Chatbot Arena - a Hugging Face Space by lmsys

Chatbot Arena - LLM Benchmarking Using Elo | npaka

Olexandr Prokhorenko on LinkedIn: Chatbot Arena: Benchmarking LLMs in the Wild with Elo Ratings

"vicuna-33b-v1.3", Closing In on Commercial LLMs, and Benchmarking Methods for Chat LLMs | はまち

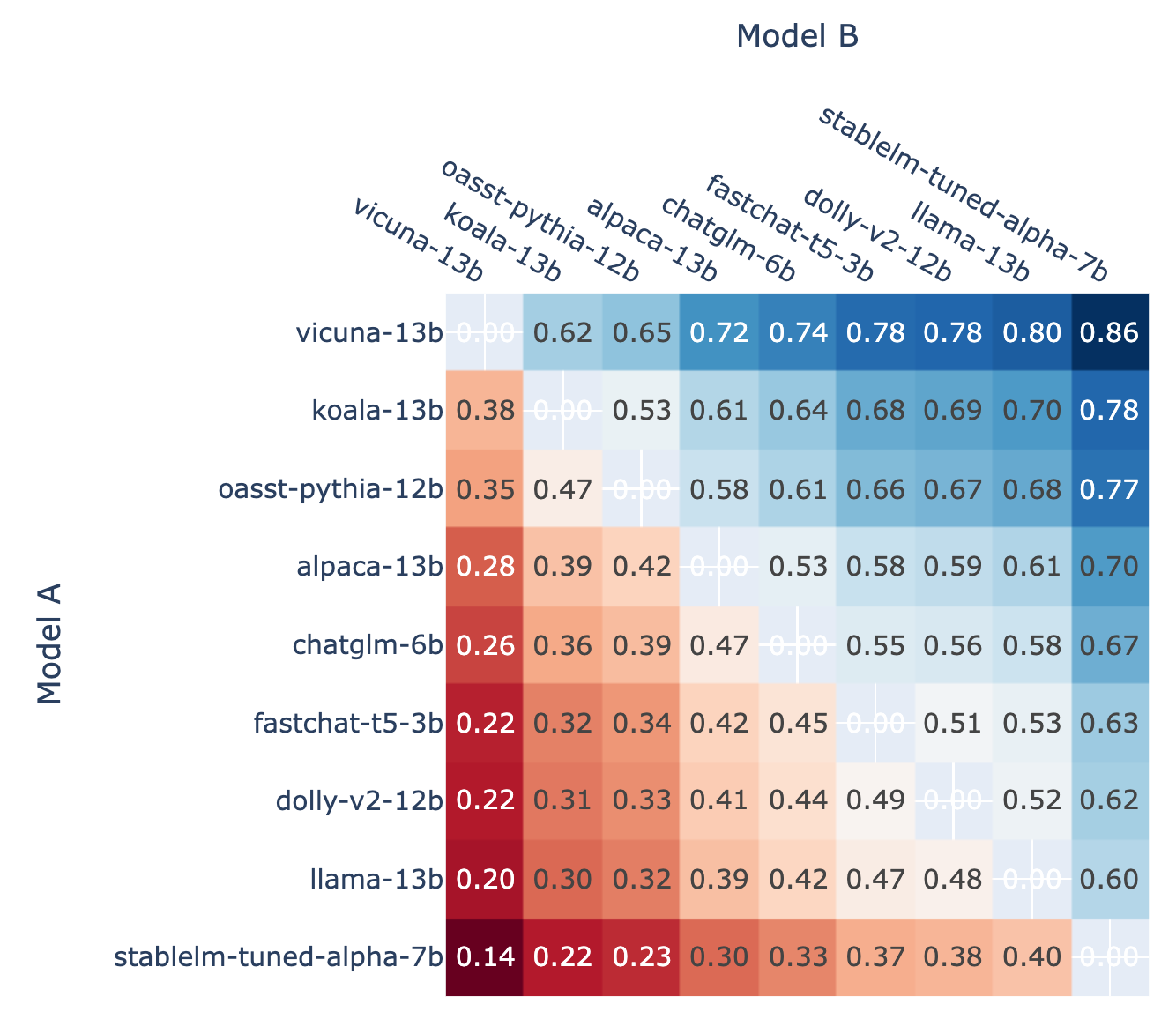

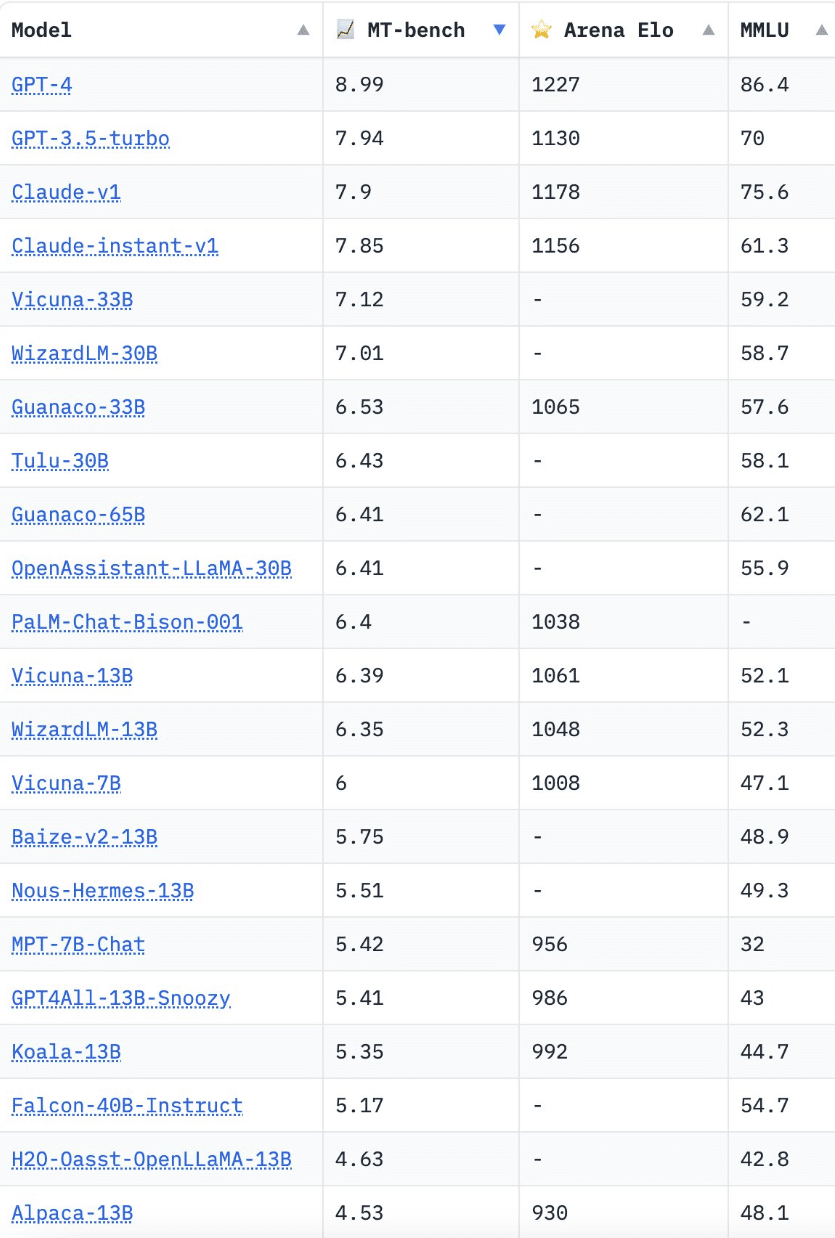

Large Language Model Evaluation in 2023: 5 Methods

LMSYS Org Releases Chatbot Arena and LLM Evaluation Datasets

LLM Benchmarking: How to Evaluate Language Model Performance, by Luv Bansal, MLearning.ai, Nov, 2023

Vinija's Notes • Primers • Overview of Large Language Models

Recommended for you

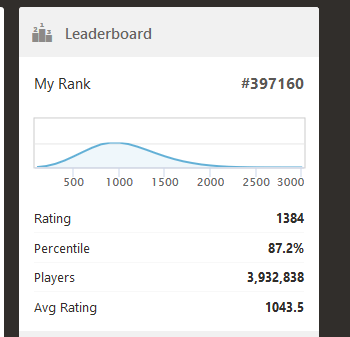

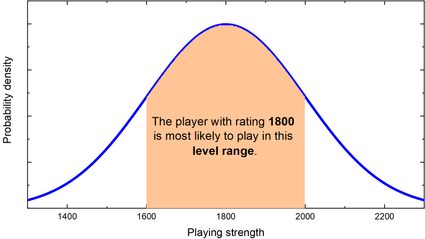

Rating distribution graph - Chess Forums30 março 2025

Rating distribution graph - Chess Forums30 março 2025 -

Guess the Elo 2.0 - Test Your Chess Rating — Eightify30 março 2025

Guess the Elo 2.0 - Test Your Chess Rating — Eightify30 março 2025 -

Elo Calculator Elo Rating Calculator30 março 2025

Elo Calculator Elo Rating Calculator30 março 2025 -

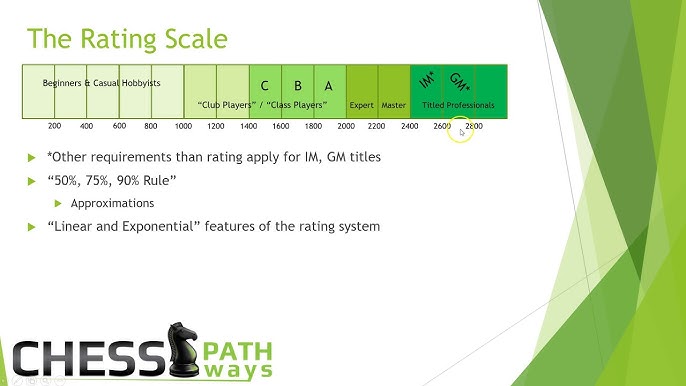

The Elo Rating System for Chess and Beyond30 março 2025

The Elo Rating System for Chess and Beyond30 março 2025 -

Elo Rating System - Everything You Need to Know30 março 2025

Elo Rating System - Everything You Need to Know30 março 2025 -

PDF) Does chess need intelligence? — A study with young chess players30 março 2025

PDF) Does chess need intelligence? — A study with young chess players30 março 2025 -

What chess level is my child?30 março 2025

What chess level is my child?30 março 2025 -

The Russian Endgame Handbook: Don't Turn Chess Wins into Draws - SparkChess30 março 2025

The Russian Endgame Handbook: Don't Turn Chess Wins into Draws - SparkChess30 março 2025 -

SPACIOUS-MIND – RATE YOUR SKILL IN CHESS TESTS. - ppt download30 março 2025

SPACIOUS-MIND – RATE YOUR SKILL IN CHESS TESTS. - ppt download30 março 2025 -

🧩 Chess Daily Puzzle 🧩 🔍 Difficulty: 2281 ELO Rating 🔍 🔶 White to play and gain an advantage in just 2 moves! 🔶 🌟 Test your chess skills…30 março 2025
